Types of data syncs

Batch syncs

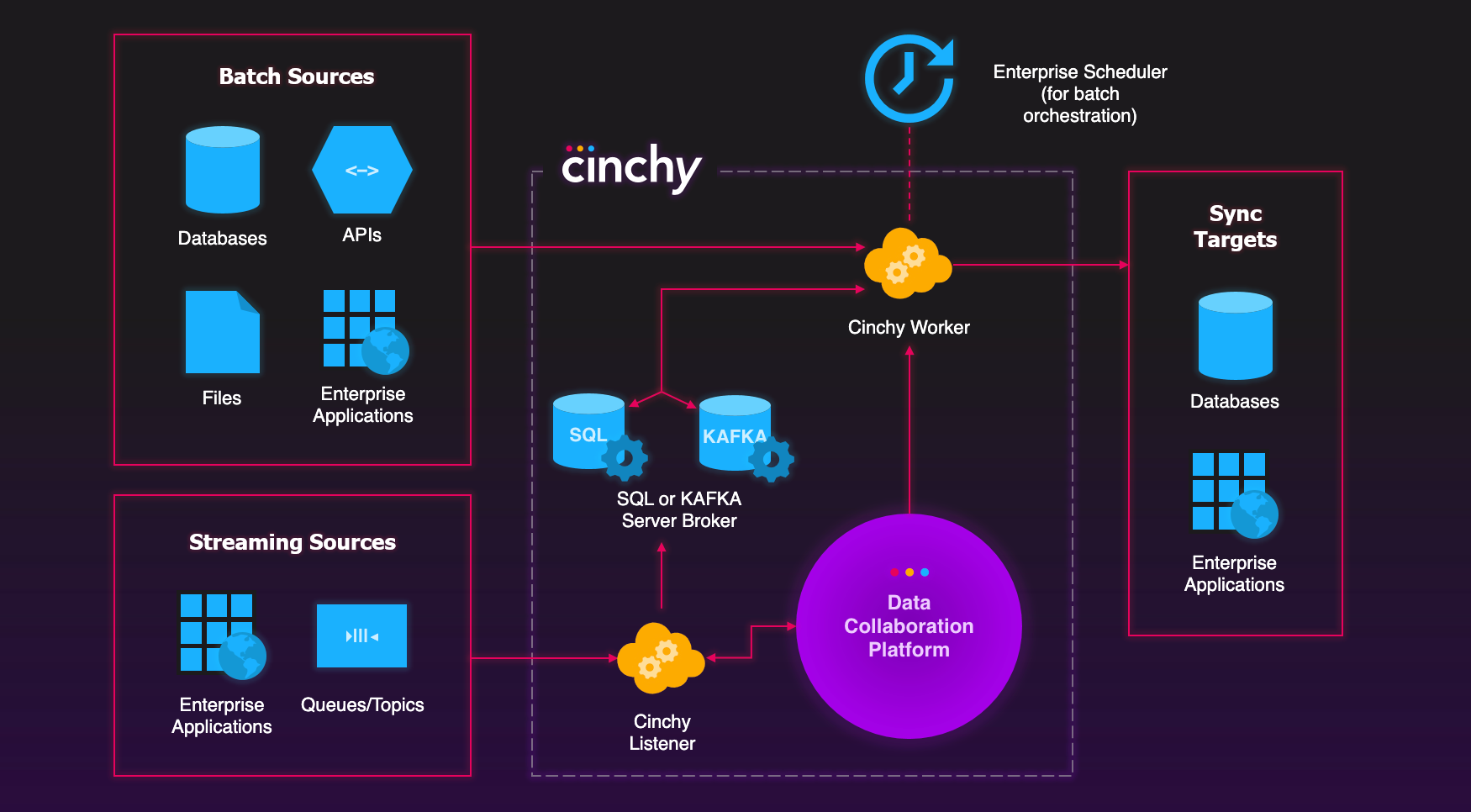

Batch syncs process a group, or ‘batch’, of data all at once rather than each record individually. When the data sync triggers, it compares the contents of the source to the target, and the Cinchy Worker decides whether to add, delete, or update data. You can run a batch sync as a one-time data load operation, or you can schedule it to run periodically using an external Enterprise Scheduler.

A batch sync is ideal when results and updates don't need to happen immediately and can instead occur periodically. For example, a document that's reviewed once a month might not need an update for every change.

Execution flow

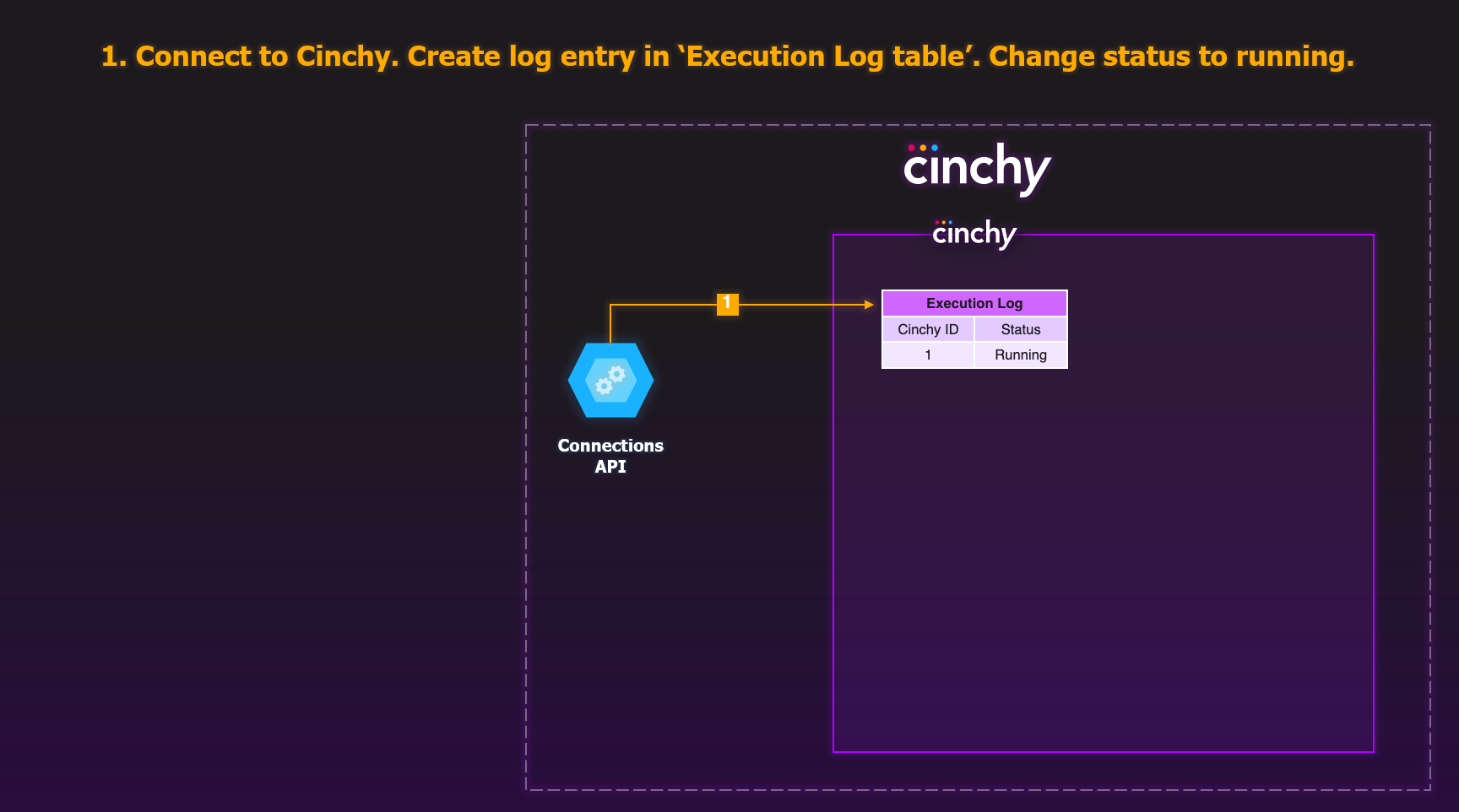

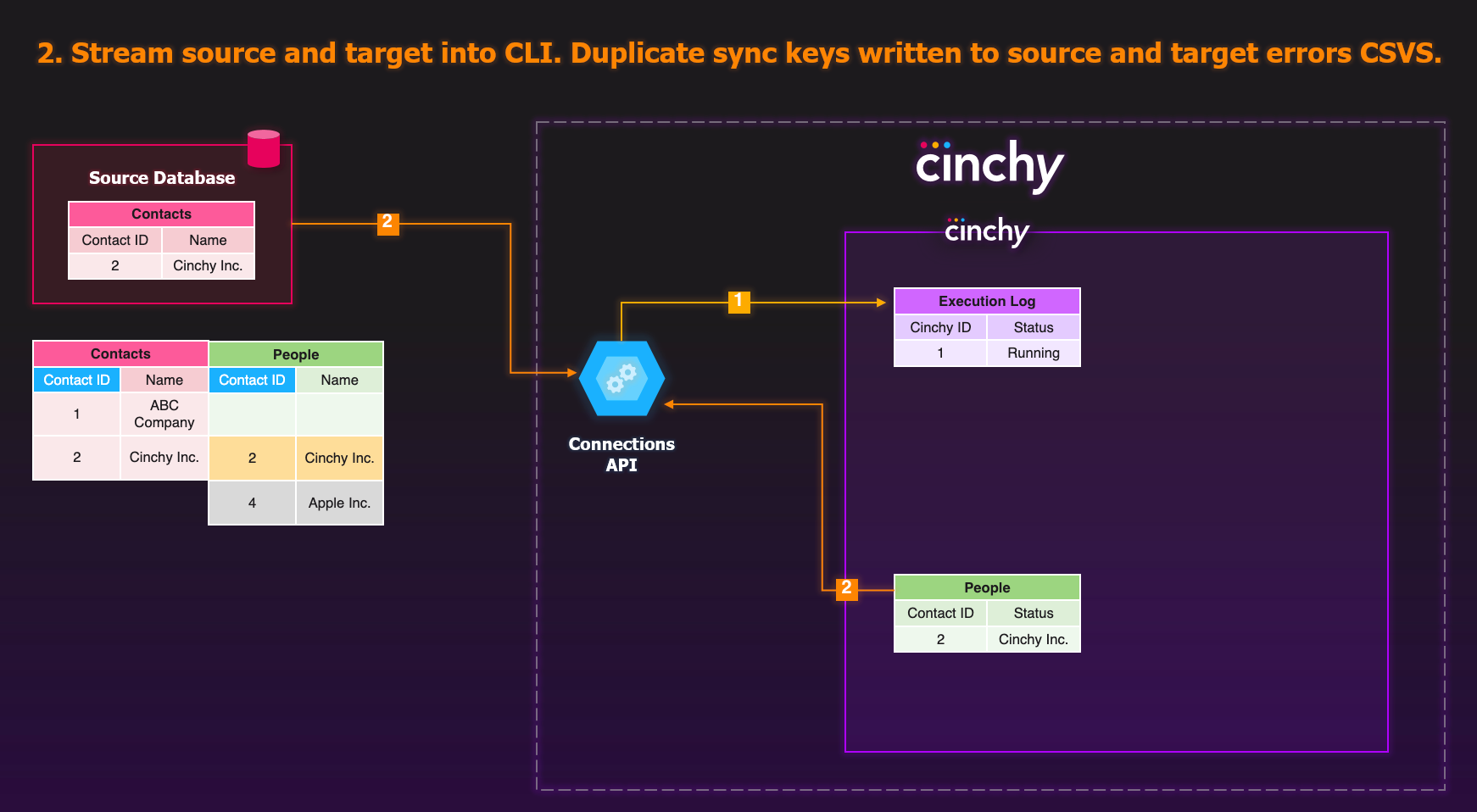

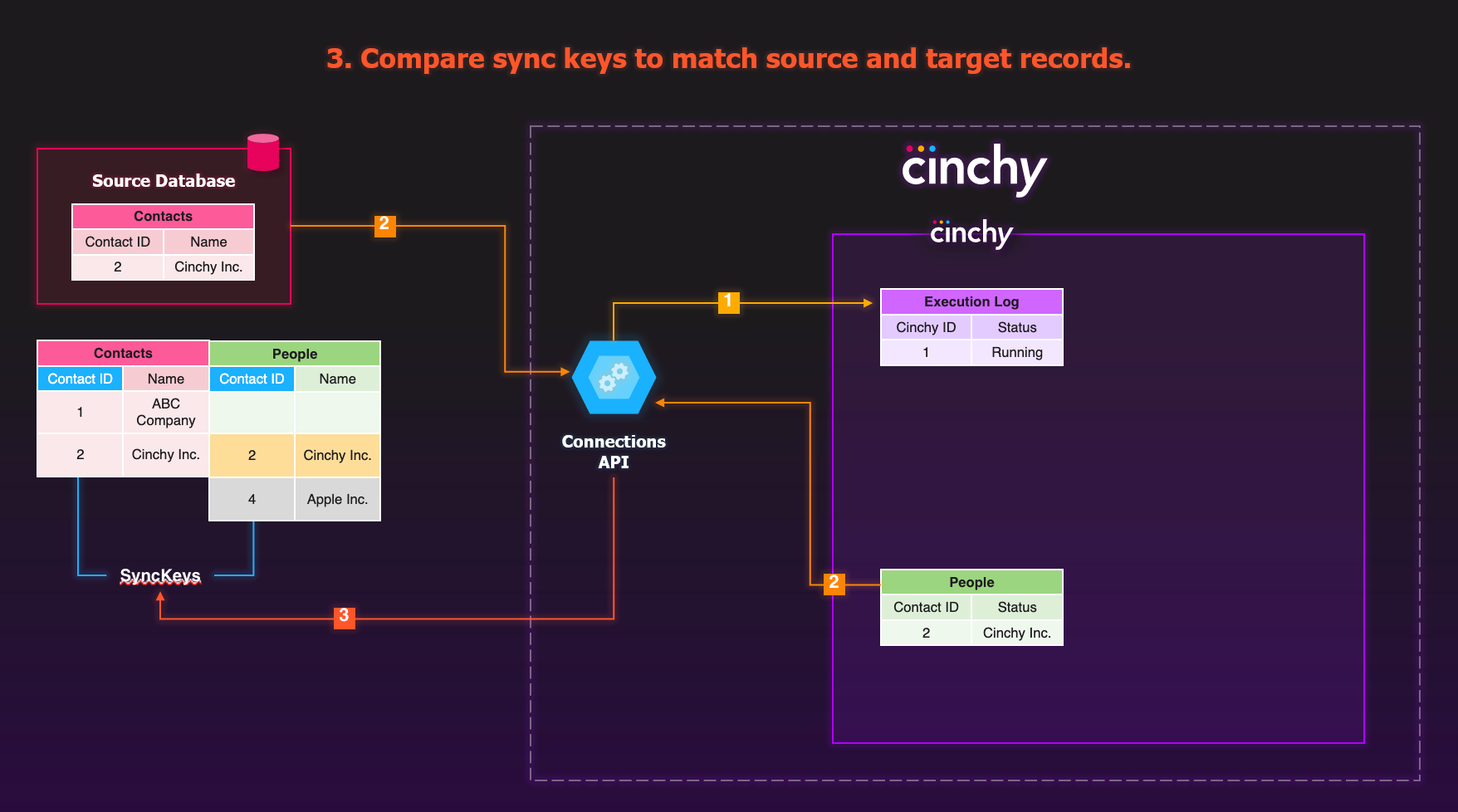

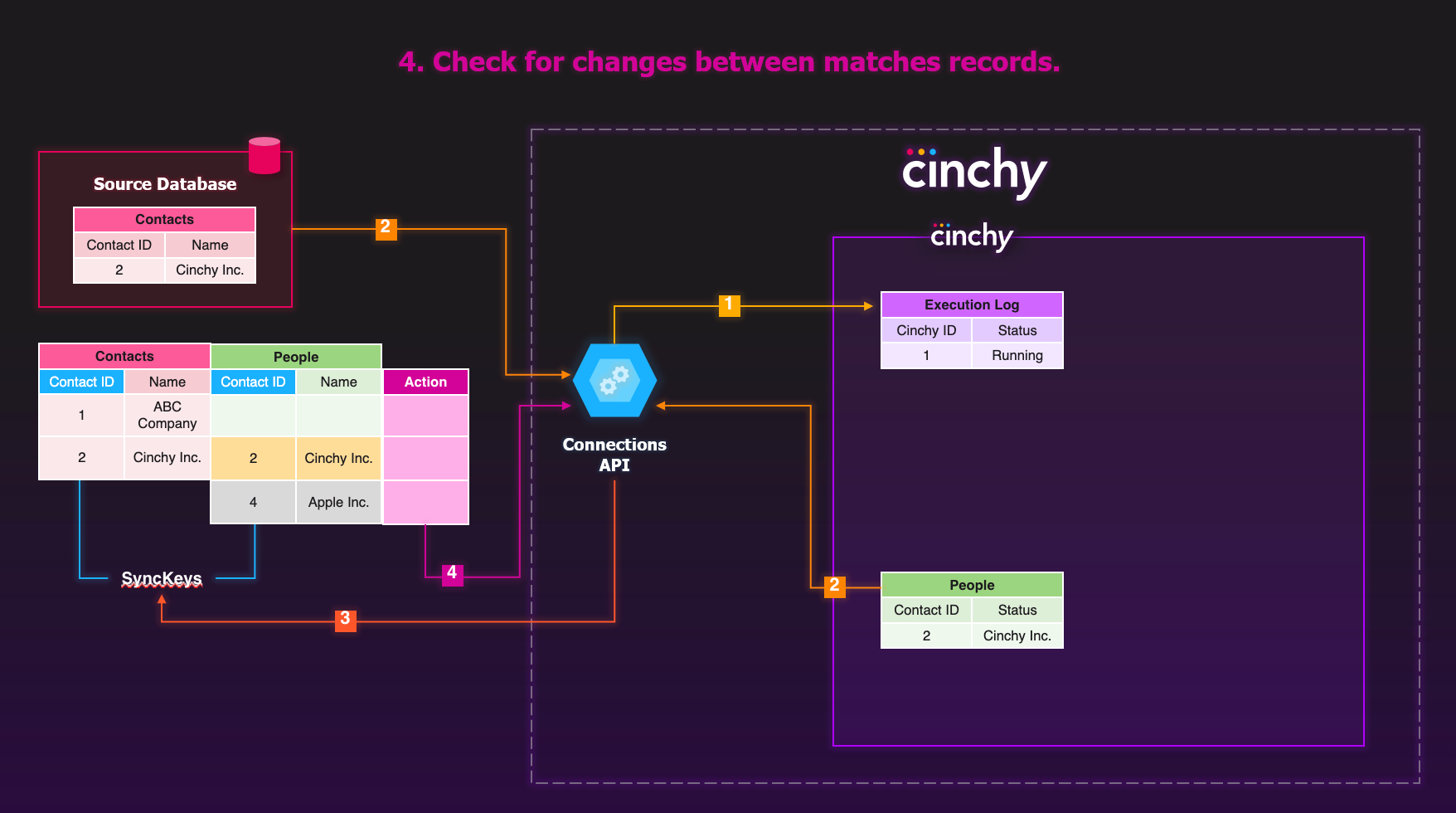

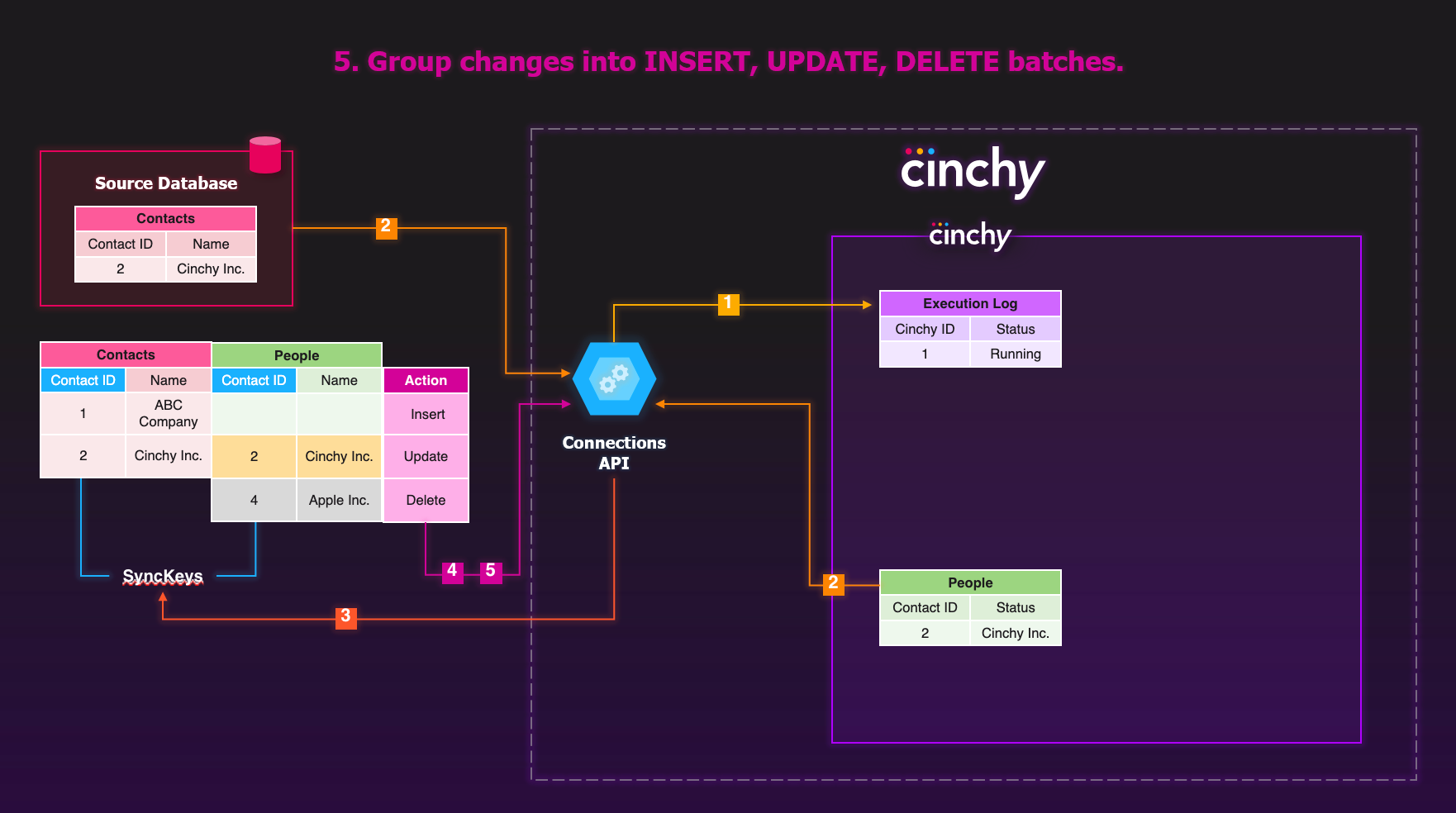

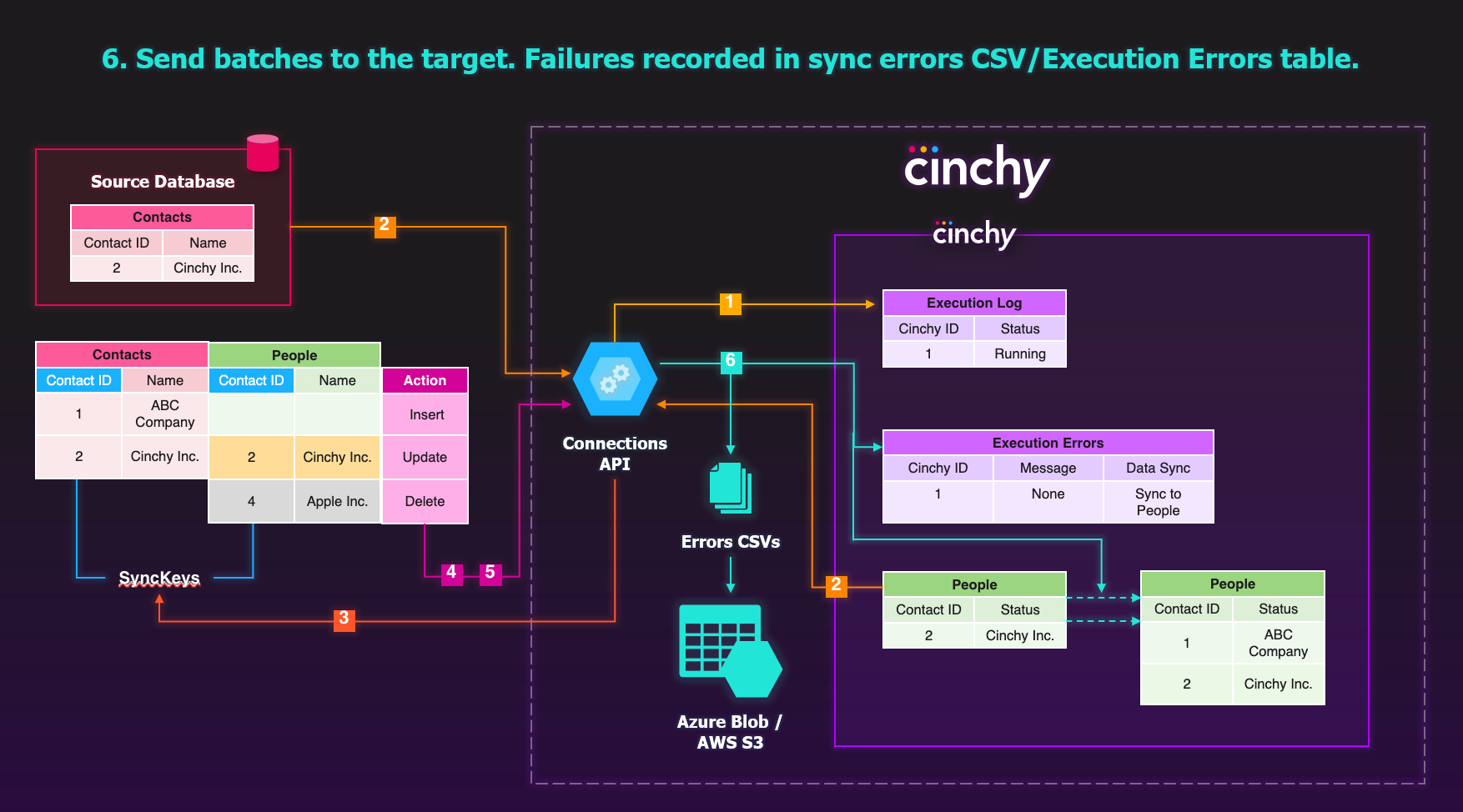

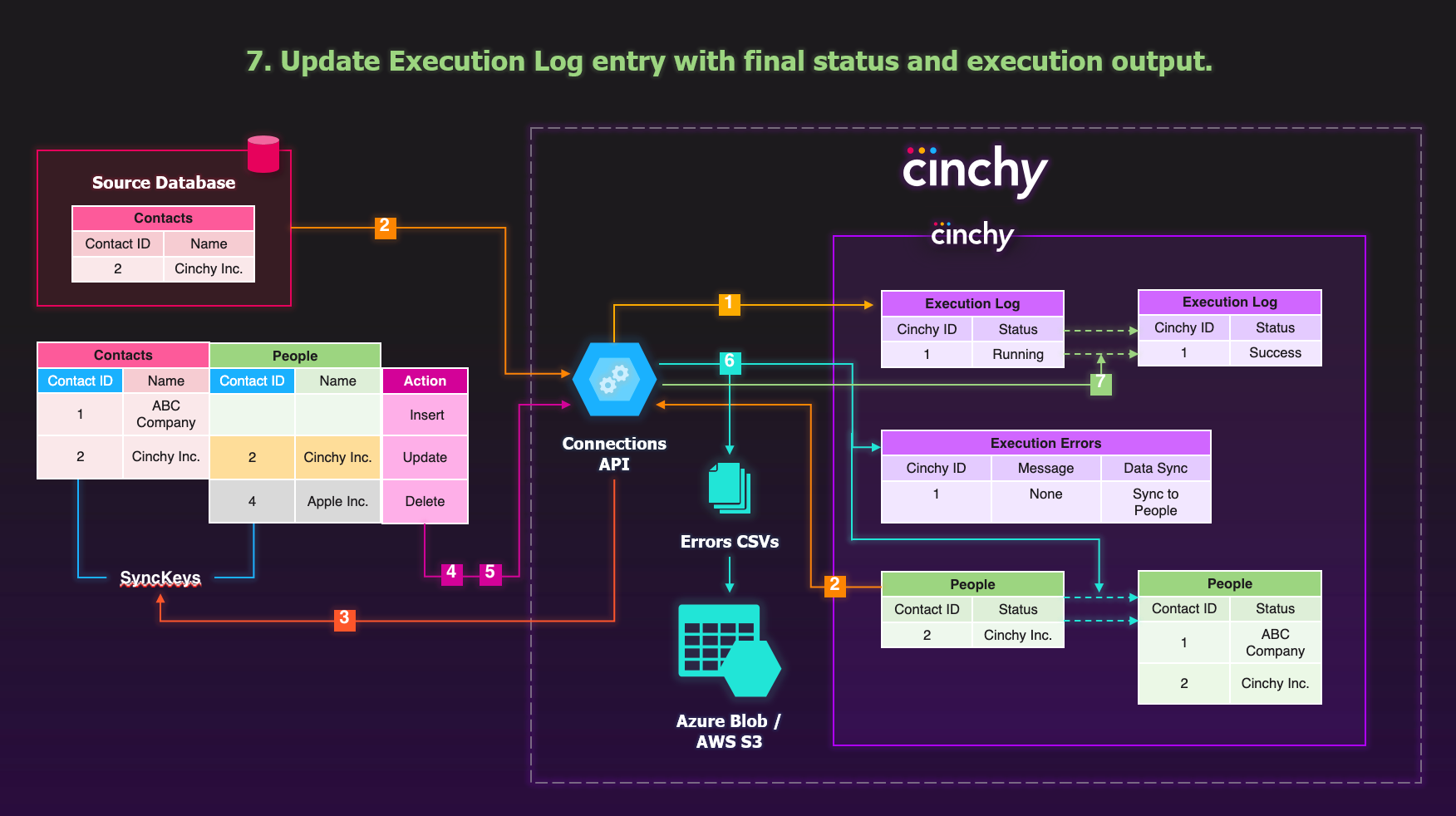

At a high level, running a batch data sync operation performs these steps (Image 1):

- The sync connects to Cinchy and creates a log entry in the Execution Log table with a status of running.

- It streams the source and target into the CLI. Any malformed records or duplicate sync keys are written to the source and target error CSVs in the temp directory.

- It compares the sync keys to match up source and target records.

- It checks whether the matched records have any changes.

- It groups the records that have changes into insert, update, and delete batches.

- It sends the batches to the target and records any failures in the sync errors CSV and the Execution Errors table.

- Once complete, it updates the Execution Log entry with the final status and execution output.
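The steps above can be sketched in Python as follows. This is a minimal, in-memory illustration under stated assumptions, not Cinchy's implementation: records are dictionaries, `send_batch` is a hypothetical callback standing in for writes to the target, the error lists stand in for the error CSVs and the Execution Errors table, and `ExecutionLog` with its `"Succeeded"`/`"Failed"` statuses is a placeholder. It also assumes that every row sharing a duplicated sync key is excluded and that target records missing from the source should be deleted; how the real sync treats those cases depends on its configuration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]
SendBatch = Callable[[str, List[Record]], None]  # hypothetical target writer


@dataclass
class ExecutionLog:
    """Hypothetical stand-in for an Execution Log table entry."""
    status: str = "Running"
    output: Dict[str, int] = field(default_factory=dict)


def index_by_key(records: Iterable[Record], sync_key: str, errors: List[str]) -> Dict[Any, Record]:
    """Index records by sync key; divert malformed rows and duplicate keys to errors."""
    valid: List[Record] = []
    counts: Counter = Counter()
    for rec in records:
        if rec.get(sync_key) is None:  # no usable sync key: treat as malformed
            errors.append(f"malformed record: {rec!r}")
        else:
            valid.append(rec)
            counts[rec[sync_key]] += 1
    indexed: Dict[Any, Record] = {}
    for rec in valid:
        key = rec[sync_key]
        if counts[key] > 1:  # assumption: every row sharing a duplicate key is excluded
            errors.append(f"duplicate sync key: {key!r}")
        else:
            indexed[key] = rec
    return indexed


def run_batch_sync(
    source: Iterable[Record],
    target: Iterable[Record],
    sync_key: str,
    send_batch: SendBatch,
    batch_size: int = 100,
) -> ExecutionLog:
    log = ExecutionLog()  # Step 1: the log entry starts as "Running"
    source_errors: List[str] = []
    target_errors: List[str] = []
    sync_errors: List[str] = []

    # Step 2: read both sides, diverting bad rows (the real sync writes CSVs)
    src = index_by_key(source, sync_key, source_errors)
    tgt = index_by_key(target, sync_key, target_errors)

    # Steps 3-5: match on sync key, keep only changed records, group by operation
    changes: Dict[str, List[Record]] = {
        "insert": [r for k, r in src.items() if k not in tgt],
        "update": [r for k, r in src.items() if k in tgt and r != tgt[k]],
        "delete": [r for k, r in tgt.items() if k not in src],
    }

    # Step 6: send each group in fixed-size batches, recording failures
    for op, records in changes.items():
        for start in range(0, len(records), batch_size):
            batch = records[start:start + batch_size]
            try:
                send_batch(op, batch)
            except Exception as exc:  # one failed batch doesn't stop the others
                sync_errors.append(f"{op} batch at offset {start} failed: {exc}")

    # Step 7: record the final status and a summary of what happened
    log.status = "Failed" if sync_errors else "Succeeded"
    log.output = {op: len(recs) for op, recs in changes.items()}
    log.output.update(
        source_errors=len(source_errors),
        target_errors=len(target_errors),
        sync_errors=len(sync_errors),
    )
    return log
```

Wrapping each batch in its own `try` block means a rejected batch is recorded as an error while the remaining batches still run, rather than aborting the whole sync.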


Real-time data sync

In real-time syncs, the Cinchy Listener picks up changes in the source as they occur. These syncs don't need to be triggered manually or scheduled with an external scheduler. However, setting up a real-time sync requires one extra step: defining a listener configuration.

Real-time sync is ideal when results and responses must be immediate. For example, a document that's constantly checked and referred to should always have the most up-to-date information.

You can use the following sources in real-time syncs:

- Cinchy Event Broker/CDC

- MongoDB Collection (Event Triggered)

- Polling Event

- REST API (Event Triggered)

- Salesforce Platform Event

Execution flow

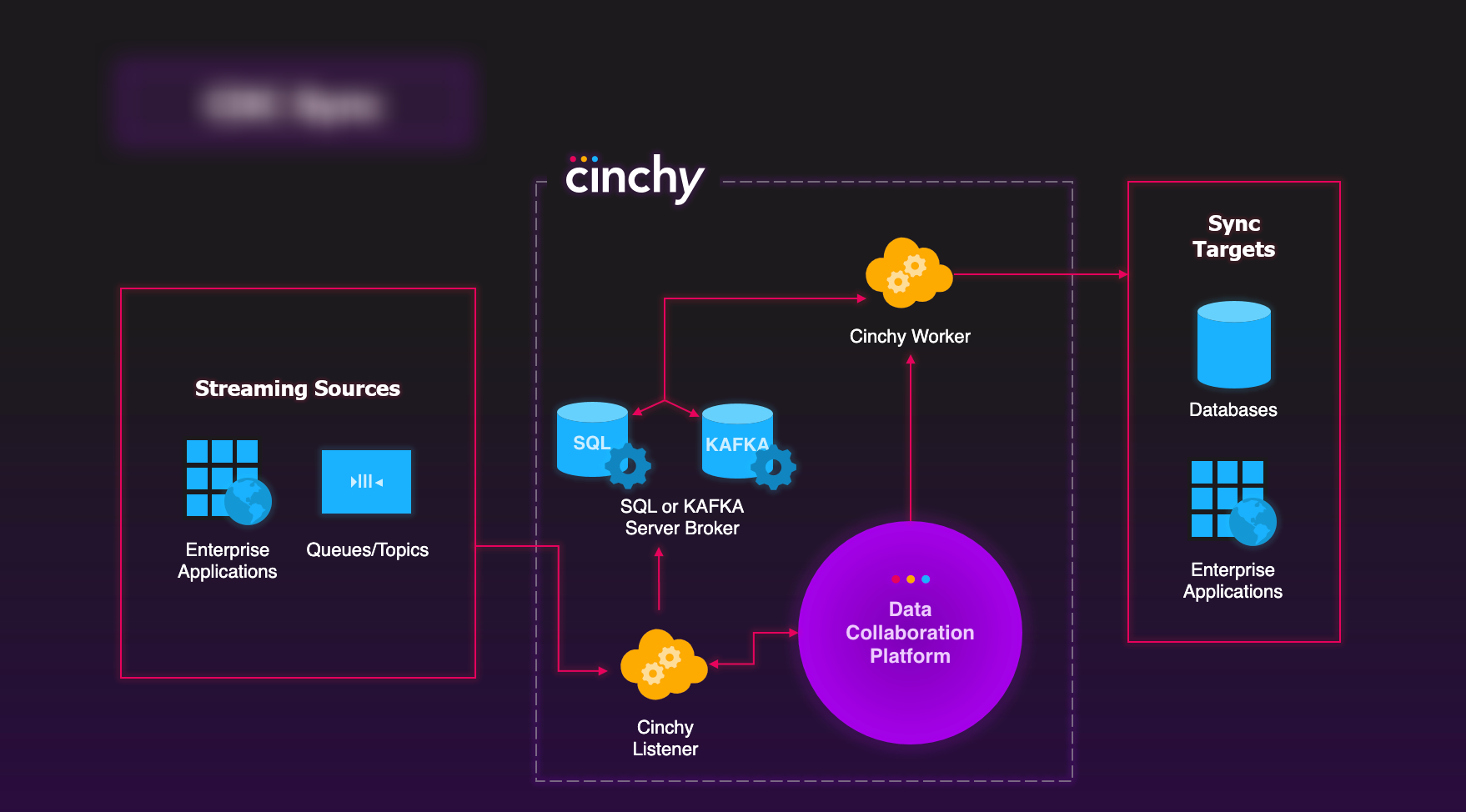

At a high level, running a real-time data sync operation performs these steps (Image 2):

- The Listener successfully subscribes to the streaming source and waits for events.

- The Listener receives a message from the streaming source and pushes it to SQL Server Broker.

- The Worker picks up the message from SQL Server Broker.

- The Worker fetches the matching record from the target based on the sync key.

- If it detects changes, the Worker pushes them to the target system and logs successes and failures in its log file.
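The real-time flow above can be sketched the same way. In this minimal, single-threaded Python illustration, a `queue.Queue` stands in for SQL Server Broker, `InMemoryTarget` is a hypothetical target keyed by sync key, and a plain list stands in for the worker's log file. The function and class names are illustrative assumptions, not Cinchy APIs, and deletes and retries aren't modeled.

```python
import queue
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]


class InMemoryTarget:
    """Hypothetical target system that stores rows by sync key."""

    def __init__(self, sync_key: str) -> None:
        self.sync_key = sync_key
        self.rows: Dict[Any, Record] = {}

    def fetch(self, key: Any) -> Optional[Record]:
        return self.rows.get(key)

    def upsert(self, record: Record) -> None:
        self.rows[record[self.sync_key]] = dict(record)


def listener_on_event(event: Record, broker: "queue.Queue[Record]") -> None:
    # Listener: forward each event from the streaming source to the broker
    broker.put(event)


def worker_drain(broker: "queue.Queue[Record]", target: InMemoryTarget, worker_log: List[str]) -> None:
    # Worker: process queued messages until the broker is empty
    while True:
        try:
            event = broker.get_nowait()
        except queue.Empty:
            return
        key = event.get(target.sync_key)
        if key is None:
            worker_log.append(f"FAILED: event without sync key: {event!r}")
            continue
        current = target.fetch(key)  # matching target record, if any
        if current == event:
            continue  # no changes detected, so nothing is pushed
        try:
            target.upsert(event)
            action = "updated" if current is not None else "inserted"
            worker_log.append(f"OK: {key!r} {action}")
        except Exception as exc:
            worker_log.append(f"FAILED: {key!r}: {exc}")
```

Because the Worker compares each incoming event with the current target record before writing, an event that carries no change results in no write to the target.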