Snowflake table

Overview

Snowflake provides a single platform for data warehousing, data lakes, data engineering, data science, data application development, and secure sharing and consumption of real-time/shared data.

Snowflake enables data storage, processing, and analytic solutions.

The Snowflake Table destination supports batch and real-time syncs.

Loading data into Snowflake

For batch syncs of 10 records or fewer, individual Insert, Update, and Delete statements are executed against the target Snowflake table.

For batch syncs exceeding 10 records, the operations are performed in bulk.

The bulk operation process consists of:

- Generating a CSV containing a batch of records

- Creating a temporary table in Snowflake

- Copying the generated CSV into the temp table

- If needed, performing Insert operations against the target Snowflake table using the temp table

- If needed, performing Update operations against the target Snowflake table using the temp table

- If needed, performing Delete operations against the target Snowflake table using the temp table

- Dropping the temporary table
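
The size threshold and the bulk steps above can be sketched in Python. This is only an illustration of the described flow, not the actual implementation: the function names, the `_TEMP` table suffix, and the CSV layout (header row, comma-separated) are all assumptions.

```python
import csv
import io

BULK_THRESHOLD = 10  # from the text: more than 10 records triggers the bulk path


def choose_load_path(record_count: int) -> str:
    """Return 'single' for 10 records or fewer, 'bulk' otherwise."""
    return "bulk" if record_count > BULK_THRESHOLD else "single"


def records_to_csv(records: list, columns: list) -> str:
    """Serialize a batch of records (dicts) to CSV text, the first bulk step."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)  # header row (an assumption about the layout)
    for record in records:
        writer.writerow([record.get(column, "") for column in columns])
    return buffer.getvalue()


def bulk_steps(target_table: str, inserts: bool, updates: bool, deletes: bool) -> list:
    """List the bulk-flow steps in order; the temp table name is hypothetical."""
    temp_table = f"{target_table}_TEMP"
    steps = [
        "generate CSV",
        f"create temporary table {temp_table}",
        f"copy CSV into {temp_table}",
    ]
    # Each operation runs only if the batch actually needs it.
    if inserts:
        steps.append(f"insert into {target_table} from {temp_table}")
    if updates:
        steps.append(f"update {target_table} from {temp_table}")
    if deletes:
        steps.append(f"delete from {target_table} using {temp_table}")
    steps.append(f"drop {temp_table}")
    return steps
```

For example, `choose_load_path(10)` selects the single-statement path, while `choose_load_path(11)` selects the bulk path.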

Real-time syncs use a dynamic batch size, up to a configurable threshold.

Considerations

- The temporary table generated in the bulk flow for high-volume scenarios converts all columns of data type Number to NUMBER(38, 18). This may cause precision loss if the number scale in the target table is higher than 18.

- Snowflake has a 20-statement concurrency limit. Kafka Topic isolation can be used for load management in syncs that exceed that limit.
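
To illustrate the first consideration, the sketch below uses Python's `decimal` module to show what happens when a value with more than 18 fractional digits is held at a scale of 18. The function name is hypothetical, and the rounding mode is an assumption; this only demonstrates that digits beyond the 18th are lost.

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext

TEMP_TABLE_SCALE = Decimal("1E-18")  # 18 fractional digits, as in NUMBER(38, 18)


def through_temp_table(value: str) -> Decimal:
    """Reduce a decimal string to 18 fractional digits (rounding mode assumed)."""
    with localcontext() as ctx:
        ctx.prec = 38  # allow up to 38 significant digits, as in NUMBER(38, 18)
        return Decimal(value).quantize(TEMP_TABLE_SCALE, rounding=ROUND_HALF_UP)


original = Decimal("0.12345678901234567891")  # scale 20, e.g. from a NUMBER(38, 20) column
staged = through_temp_table("0.12345678901234567891")
print(staged == original)  # False: the last two fractional digits were lost
```

Values with 18 or fewer fractional digits, such as `1.5`, pass through unchanged.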

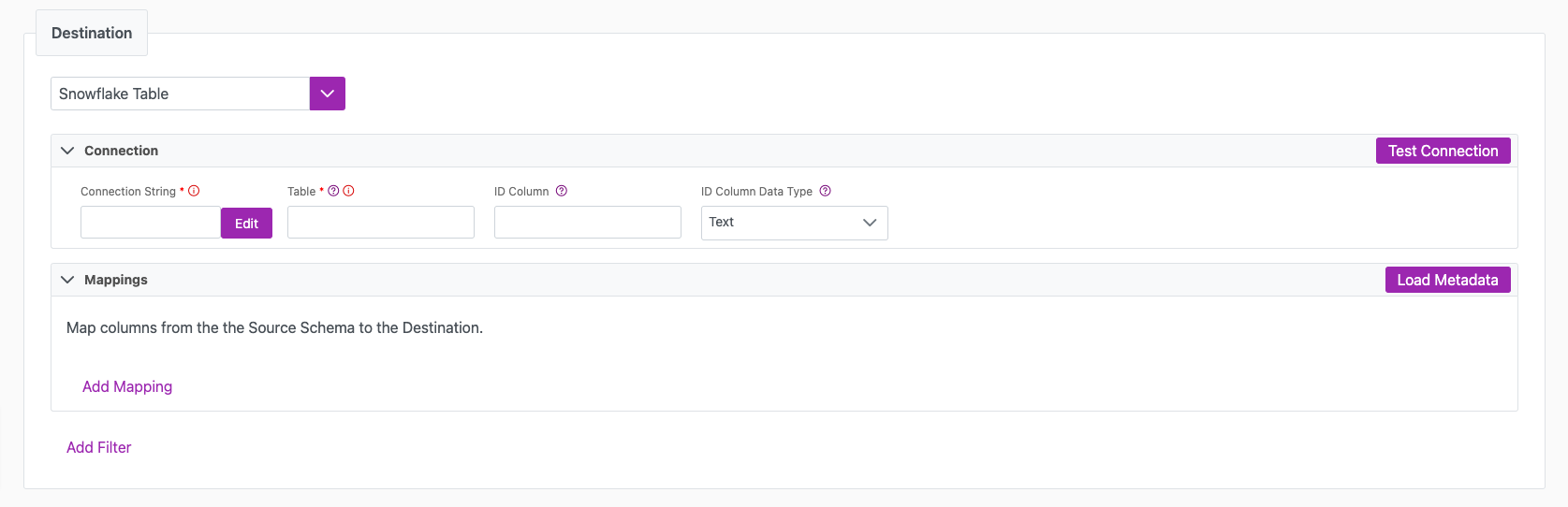

Destination tab

The following sections outline the mandatory and optional parameters you will find on the Destination tab (Image 1):

- Destination details

- Column Mapping

- Filter

The following parameters will help to define your data sync destination and how it functions.

| Parameter | Description | Example |
|---|---|---|
| Destination | Mandatory. Select your destination from the drop-down menu. | Snowflake Table |
| Connection String | Mandatory. The encrypted connection string used to connect to your Snowflake instance. Note that the Snowflake driver was updated in Cinchy v5.15.1+, and it may be necessary to add PoolingEnabled=false to the connection string. | Unencrypted example using username/password: "account=wr38353.ca-central-1.aws;user=myuser;password=mypassword;db=CINCHY;schema=PUBLIC" Unencrypted example using a private key: "account=wr38353.ca-central-1.aws;authenticator=snowflake_jwt;user=myuser;private_key=<contents-of-private-key>;db=CINCHY;schema=PUBLIC" |
| Table | Mandatory. The name of the table in Snowflake that you wish to sync. | Employees |
| ID Column | Mandatory if you want to use the "Delete" action in your sync behaviour configuration. The name of the identity column that exists in the target (or a single column that's guaranteed to be unique and automatically populated for every new record). | Employee ID |
| ID Column Data Type | Mandatory if using the ID Column parameter. The data type of the above ID Column. One of: Text, Number, Date, Boolean, Geography, or Geometry. | Number |
| Test Connection | You can use the "Test Connection" button to ensure that your credentials are properly configured to access your destination. If configured correctly, a "Connection Successful" pop-up will appear. If configured incorrectly, a "Connection Failed" pop-up will appear along with a link to the applicable error logs to help you troubleshoot. | |
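
As a rough illustration of the connection string format shown in the examples above, the following Python sketch joins `key=value` pairs with semicolons and optionally appends `PoolingEnabled=false`. The helper name and its parameters are hypothetical, it does no escaping of special characters, and it produces the unencrypted form only; encrypting the string is a separate step.

```python
def build_connection_string(params: dict, disable_pooling: bool = False) -> str:
    """Join key=value pairs with ';' in insertion order (no escaping is attempted)."""
    parts = [f"{key}={value}" for key, value in params.items()]
    if disable_pooling:
        # May be needed on Cinchy v5.15.1+ according to the table above.
        parts.append("PoolingEnabled=false")
    return ";".join(parts)


connection_string = build_connection_string(
    {
        "account": "wr38353.ca-central-1.aws",
        "user": "myuser",
        "password": "mypassword",
        "db": "CINCHY",
        "schema": "PUBLIC",
    },
    disable_pooling=True,
)
print(connection_string)
```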

The Column Mapping section is where you define which source columns you want to sync to which destination columns. You can repeat the values for multiple columns.

When specifying the Target Column in the Column Mappings section, all names are case-sensitive.

| Parameter | Description | Example |
|---|---|---|
| Source Column | Mandatory. The name of your column as it appears in the source. | Name |
| Target Column | Mandatory. The name of your column as it appears in the destination. | Name |
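
Because Target Column names are case-sensitive, it can help to check a mapping against the destination's column names before running a sync. The sketch below is a hypothetical pre-check, not part of the product: the function name and the way the destination columns are supplied are assumptions.

```python
def unmatched_targets(mappings: list, destination_columns: set) -> list:
    """Return target names with no exact (case-sensitive) match in the destination."""
    return [target for _source, target in mappings if target not in destination_columns]


# (source column, target column) pairs; "email" differs from "Email" only by case.
mappings = [("Name", "Name"), ("Email", "email")]
print(unmatched_targets(mappings, {"Name", "Email"}))  # ['email']
```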

You have the option to add a destination filter to your data sync. See the destination filters documentation for more information.

Next steps

- Define your Sync Actions.

- Add in your Post Sync Scripts, if required.

- Define your Permissions.

- If you are running a real-time sync, set up your Listener Config and enable it to begin your sync.

- If you are running a batch sync, click Jobs > Start a Job to begin your sync.