Cinchy Event Broker/CDC

Overview

The Cinchy Event Broker/CDC (Change Data Capture) source allows you to capture data changes on your table and use these events in your data syncs.

- The Cinchy CDC triggers on changes such as adding, updating, or deleting a cell or group of cells.

- The Cinchy CDC triggers on row approvals, but not on individual cell approvals.

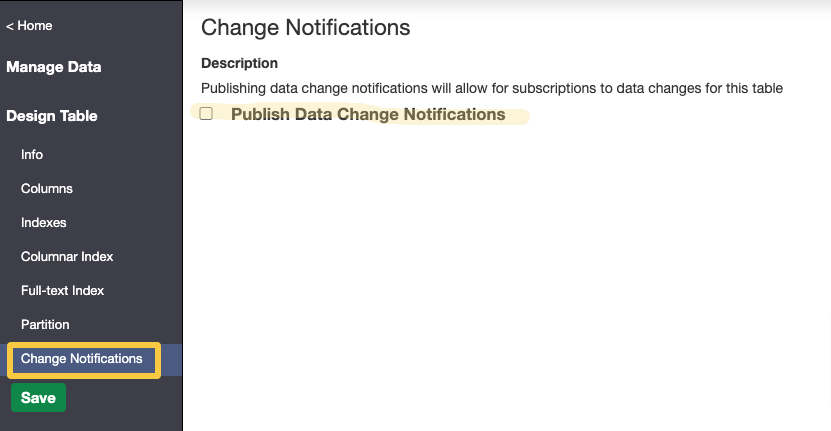

To listen to a table, the "Publish Data Change Notifications" capability must be enabled. You can do so via Design Table > Change Notifications.

Use case

To mitigate the labour and time costs of hosting information in a silo and remove the costly integration tax plaguing your IT teams, you want to connect your legacy systems into Cinchy to take advantage of the platform's sync capabilities.

To do this, you can set up a real-time sync between a Cinchy Table and Salesforce that updates Salesforce any time data is added, updated, or deleted on the Cinchy side. If you enable change notifications on your Cinchy table, you can set up a data sync and listener config with your source as the Cinchy Event Broker/CDC.

The Cinchy Event Broker/CDC supports both batch syncs and real-time syncs (most common).

Remember to set up your listener config if you are creating a real-time sync. In Cinchy v5.7+ you can do this directly in the Connections UI. If your sync needs more than one listener, you must still add the additional configurations in the Listener Config table.

Info tab

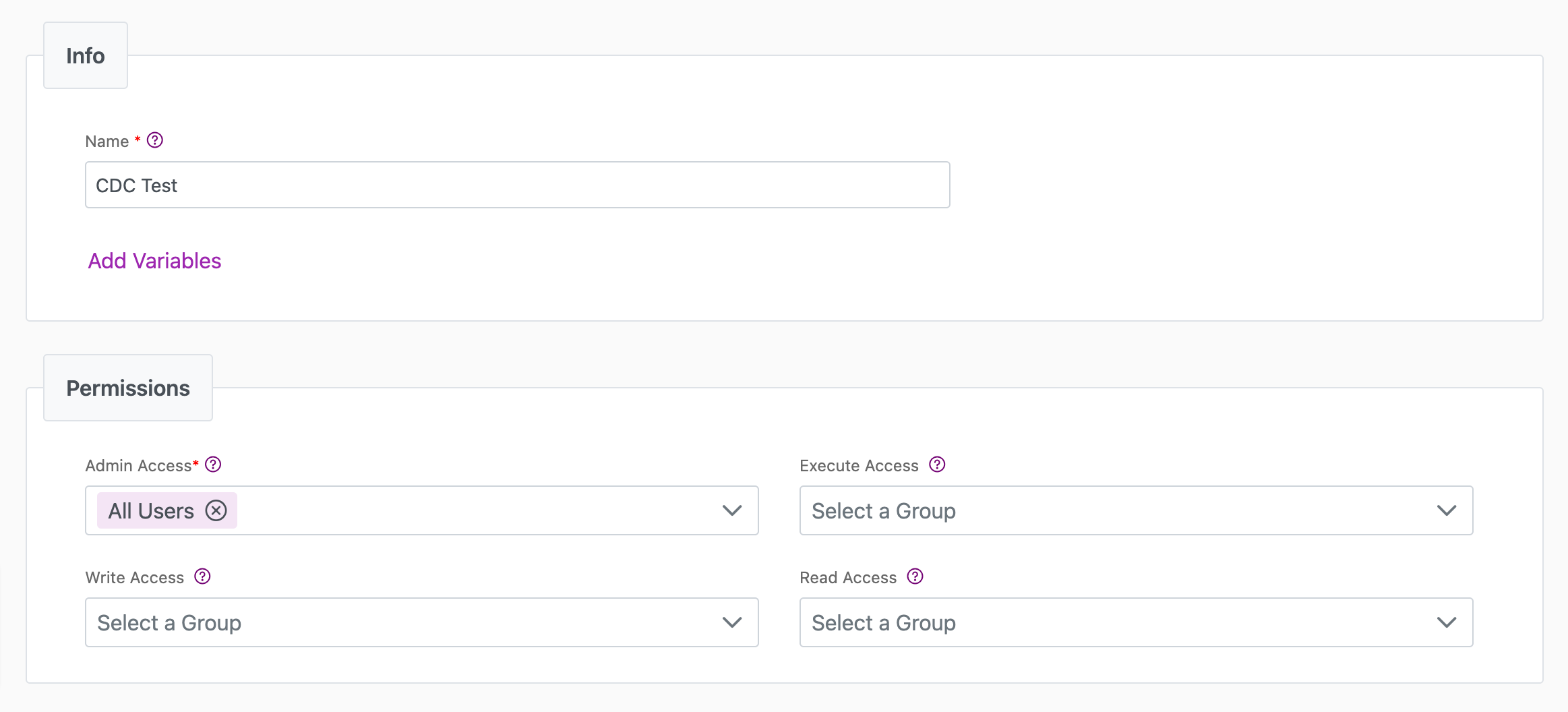

You can find the parameters in the Info tab below (Image 1).

Values

| Parameter | Description | Example |

|---|---|---|

| Title | Mandatory. Input a name for your data sync | CDC |

| Variables | Optional. Review our documentation on Variables for more information about this field. | |

| Permissions | Data syncs are role-based access systems where you can give specific groups read, write, execute, and/or all the above with admin access. Inputting at least an Admin Group is mandatory. | |

Source tab

The following table outlines the mandatory and optional parameters you will find on the Source tab (Image 2).

- Source Details

- Listener Configuration

- Schema

- Filter

The following parameters will help to define your data sync source and how it functions.

| Parameter | Description | Example |

|---|---|---|

| Source | Mandatory. Select your source from the drop-down menu. | Cinchy Event Broker/CDC |

| Run Query | Optional. If true, executes a saved query. Uses the Cinchy ID of the changed record as a parameter. See Appendix A for more details. | |

| Path to Iterate | Optional. Provides the JSON path to the array of items you want to sync. Applicable if your event message contains JSON values. | |

To set up a real-time sync, you must configure your Listener values. You can do so through the Connections UI.

If there is more than one listener associated with your data sync, you will need to configure the additional listeners via the Listener Configuration table.

Listener parameters

| Parameter | Description | Example |

|---|---|---|

| Table | The table to listen to. | Product |

| filter | WHERE clause for filtering records. | New.[Is Valid] = 1 AND (New.[Is Excluded] = 0 OR New.[Is Excluded] IS NULL) |

| messageKeyExpression | The messageKeyExpression parameter specifies a key that the listener application uses to route messages into specific topics within a Kafka broker. See below for more information. | value |

| batchSize | Number of records read per request. | 1000 |

| Return Columns | Choose whether to return data from all columns, or a selection. | Selection |

| Return Columns - Selection: column | Column name to fetch from the table. | Cinchy Id |

| Return Columns - Selection: alias | Alias for the column. | CinchyId |

| Return Columns - Selection: deserializeJsonValue | Apply this if the column values from the source are JSON and you want to access nested attributes of those values when applying your schema. This can also be toggled via the Deserialize as JSON checkbox in the Connections UI. See Appendix A for more details. | true |

| Auto Offset Reset | Earliest, Latest or None. In the case where the listener is started and either there is no last message ID, or when the last message ID is invalid (due to it being deleted or it's just a new listener), it will use this column as a fallback to determine where to start reading events from. Earliest will start reading from the beginning of the queue (when the CDC was enabled on the table). This might be a suggested configuration if your use case is recoverable or re-runnable and if you need to reprocess all events to ensure accuracy. Latest will fetch the last value after whatever was last processed. This is the typical configuration. None won't start reading any events. You are able to switch between Auto Offset Reset types after your initial configuration through the process outlined here. | None |
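The Auto Offset Reset fallback described in the table can be pictured with a small sketch. This is not Cinchy's implementation: `resolve_start`, its arguments, and the returned labels are hypothetical names chosen for illustration. It only shows that the setting is consulted when there is no last message ID or the stored one is no longer valid.

```python
from typing import Optional, Set, Tuple


def resolve_start(last_message_id: Optional[str],
                  known_message_ids: Set[str],
                  auto_offset_reset: str) -> Tuple[str, Optional[str]]:
    """Decide where a listener starts reading (hypothetical helper)."""
    # A stored, still-valid last message ID means no fallback is needed.
    if last_message_id is not None and last_message_id in known_message_ids:
        return ("resume", last_message_id)

    # No last message ID, or it is invalid: fall back to Auto Offset Reset.
    setting = auto_offset_reset.lower()
    if setting in ("earliest", "latest", "none"):
        return (setting, None)
    raise ValueError(f"Unknown Auto Offset Reset value: {auto_offset_reset!r}")


print(resolve_start("m2", {"m1", "m2"}, "None"))       # valid ID: resume from it
print(resolve_start(None, {"m1", "m2"}, "Earliest"))   # new listener: fallback used
print(resolve_start("gone", {"m1", "m2"}, "Latest"))   # invalid ID: fallback used
```

In this sketch, a result of `"none"` corresponds to the listener not reading any events until the setting is changed.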

Topic JSON example

The following is an example of how a topic JSON might look for an Event Broker sourced sync:

{

"tableGuid": "420c1851-31ed-4ada-a71b-31659bca6f92",

"fields": [

{

"column": "Cinchy Id",

"alias": "CinchyId"

},

{

"column": "First Name",

"alias": "Firstname"

},

{

"column": "Last Name",

"alias": "Lastname"

}

],

"messageKeyExpression": "CONCAT(New.[CinchyId], '-', New.[Name])",

"filter": "New.[Lastname] IS not null OR (New.[CinchyId] is not null)",

"batchSize": 100

}

Connection attributes

You don't need to provide Connection Attributes when using the Cinchy CDC Stream Source.

If you're inputting your configuration via the Listener Config table, you must insert the below text into the Connection Attributes column:

{}

The Schema section is where you define which source columns you want to sync in your connection. You can repeat the values for multiple columns.

| Parameter | Description | Example |

|---|---|---|

| Name | Mandatory. The name of your column as it appears in the source. | Name |

| Alias | Optional. You may choose to use an alias on your column so that it has a different name in the data sync. | |

| Data Type | Mandatory. The data type of the column values. | Text |

| Description | Optional. You may choose to add a description to your column. | |

Select Show Advanced for more options for the Schema section.

| Parameter | Description | Example |

|---|---|---|

| Mandatory | | |

| Validate Data | | |

| Trim Whitespace | Optional if data type = text. For Text data types, you can choose whether to trim the whitespace. | |

| Max Length | Optional if data type = text. You can input a numerical value in this field that represents the maximum length of the data that can be synced in your column. If the value is exceeded, the row will be rejected (you can find this error in the Execution Log). | |
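As a rough illustration of how Trim Whitespace and Max Length interact on a Text column, consider the sketch below. The names `apply_text_rules` and `RowRejected` are hypothetical. It also assumes that trimming removes leading and trailing whitespace and happens before the length check; the source does not confirm either detail.

```python
from typing import Optional


class RowRejected(Exception):
    """Raised when a value breaks a schema rule (hypothetical)."""


def apply_text_rules(value: str,
                     trim_whitespace: bool = False,
                     max_length: Optional[int] = None) -> str:
    # Assumption: trimming strips leading/trailing whitespace only.
    if trim_whitespace:
        value = value.strip()
    # Assumption: the length check runs on the (possibly trimmed) value.
    if max_length is not None and len(value) > max_length:
        raise RowRejected(
            f"Value of length {len(value)} exceeds Max Length {max_length}"
        )
    return value


print(apply_text_rules("  Widget  ", trim_whitespace=True, max_length=6))

try:
    apply_text_rules("  Widget  ", trim_whitespace=False, max_length=6)
except RowRejected as error:
    print("Rejected:", error)
```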

You can choose to add in a Transformation > String Replacement by inputting the following:

| Parameter | Description | Example |

|---|---|---|

| Pattern | Mandatory if using a Transformation. The pattern for your string replacement. | |

| Replacement | What you want to replace your pattern with. | |

You have the option to add a source filter to your data sync. Please review the documentation here for more information on source filters.

Next steps

- Configure your Destination.

- Define your Sync Actions.

- Add in your Post Sync Scripts, if required.

- If more than one listener is needed for a real-time sync, configure it/them via the Listener Config table.

- To run a real-time sync, enable your Listener from the Execution tab.

Appendix A: Parameters

The following sections outline more information about specific parameters you can find on this source.

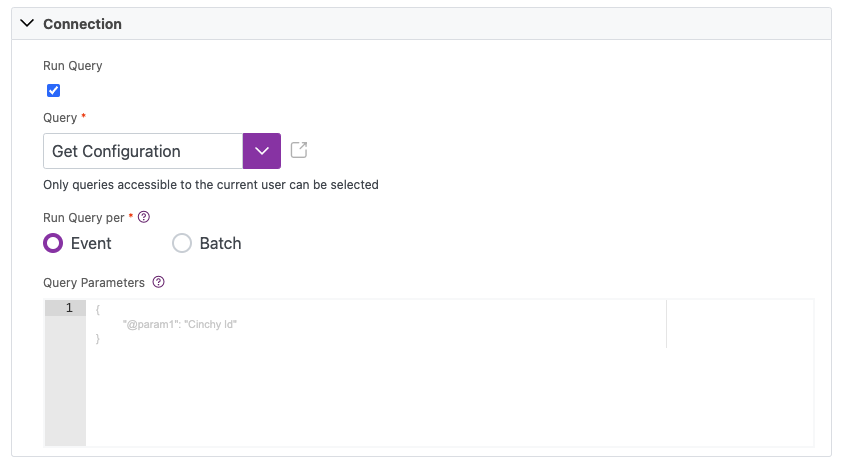

Run Query

The Run Query parameter is available as an optional value for the Cinchy Event Broker/CDC connector. If set to true it executes a saved query; whichever record triggered the event becomes a parameter in that query. Thus the query now becomes the source instead of the table itself. You are able to use any parameters defined in your listener config.

In Cinchy v5.14+, you are able to define whether Run Query executes as a Batch or as an Event. (Prior to v5.14, Run Query would always execute as Event.) For instance, in a sync where the worker collects a batch of 20 messages:

Using the Event flag: the specified query is run 20 times, once for each record in the topic batch.

Using the Batch flag: the specified query is run only once, for the entire topic batch at a time.
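The difference between the two flags can be sketched as a plan of query executions. `plan_query_runs` is a hypothetical helper, not part of Cinchy. It only models how many times the saved query would run and which Cinchy IDs each run receives.

```python
from typing import List


def plan_query_runs(cinchy_ids: List[int], mode: str) -> List[List[int]]:
    """Return one list of Cinchy IDs per query execution (hypothetical)."""
    if mode == "Event":
        # One execution per record in the topic batch.
        return [[cinchy_id] for cinchy_id in cinchy_ids]
    if mode == "Batch":
        # A single execution covering the entire topic batch.
        return [list(cinchy_ids)] if cinchy_ids else []
    raise ValueError(f"Unknown Run Query mode: {mode!r}")


batch = list(range(1, 21))  # a topic batch of 20 messages
print(len(plan_query_runs(batch, "Event")))  # number of runs with the Event flag
print(len(plan_query_runs(batch, "Batch")))  # number of runs with the Batch flag
```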

Example

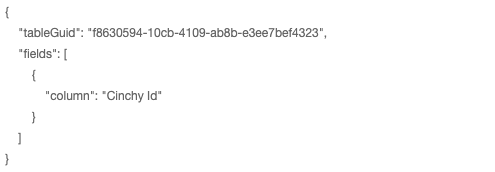

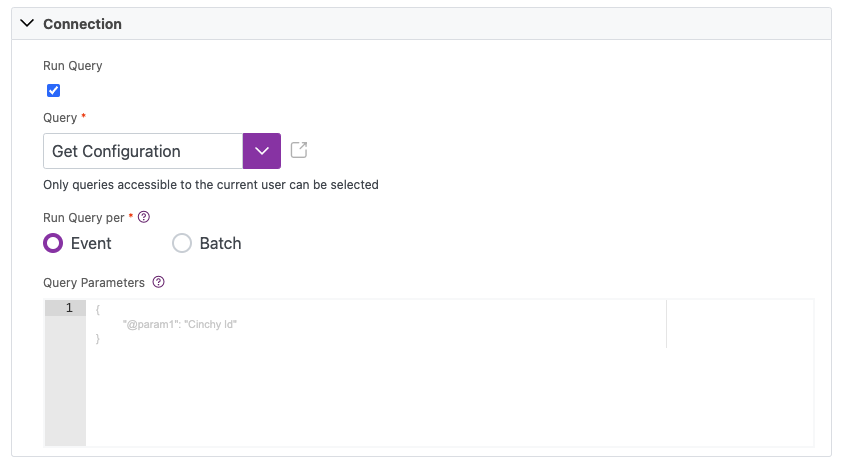

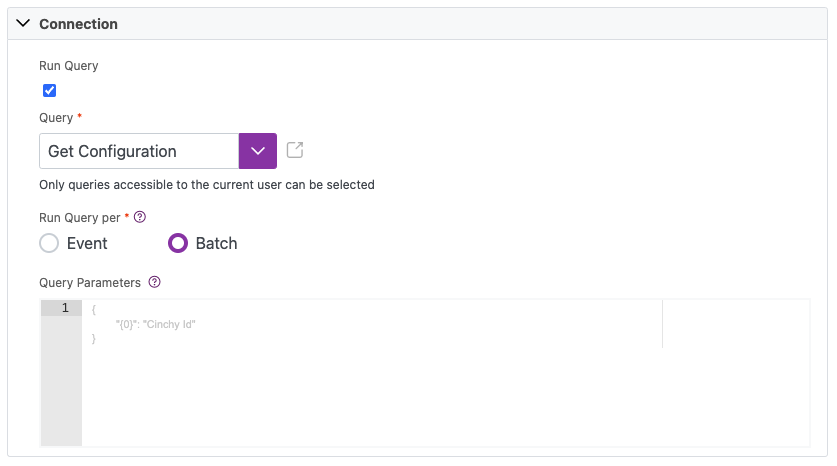

The example below is a data sync using the Event Broker/CDC as a source. Our Listener Config has been set with the CinchyID attribute (Image 4).

We can enable the Run Query function to use the saved query "Get Configuration" as our source instead. Note that the query is slightly different for a Batch vs Event query as @parameters do not work with IN statements.

// "Get Configuration - Event" Query

SELECT Name, [Cinchy Id]

FROM [HR].[Employees]

WHERE [Cinchy Id] = @param1

AND [Deleted] IS NULL

// "Get Configuration - Batch" Query

SELECT Name, [Cinchy Id]

FROM [HR].[Employees]

WHERE [Cinchy Id] IN ({0})

AND [Deleted] IS NULL

If we change the data in our source table to trigger the event, the query parameters below show that the Cinchy ID of the changed record(s) will be used in the query. This query is now our source.

Query parameters for Event execution:

- This is the default behaviour for platform versions earlier than v5.14.

// Event Query Parameters

{

"@param1" : "Cinchy Id"

}

Query parameters for Batch execution:

- The parameter names must follow the convention {0}, {1}, etc.

- Parameter keys must be unique.

// Batch Query Parameters

{

"{0}" : "Cinchy Id"

}

In this case, the parameter in the query is replaced with the values from the batch, separated by commas. For a batch of 20 messages, the query would be transformed to contain 20 comma-separated Cinchy IDs:

SELECT Name, [Cinchy Id]

FROM [HR].[Employees]

WHERE [Cinchy Id] IN (1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20)

AND [Deleted] IS NULL
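The placeholder substitution for a Batch query can be approximated in a few lines. This is a sketch under stated assumptions rather than the platform's actual logic. `render_batch_query` is a hypothetical name, and the `int()` conversion is an added safeguard so that only numeric IDs end up in the query text.

```python
from typing import Iterable


def render_batch_query(template: str, placeholder: str,
                       cinchy_ids: Iterable[int]) -> str:
    """Replace a {0}-style placeholder with comma-separated IDs (hypothetical)."""
    # Coerce to int so that only numeric values are interpolated.
    ids = [int(cinchy_id) for cinchy_id in cinchy_ids]
    if not ids:
        raise ValueError("A batch needs at least one Cinchy ID")
    return template.replace(placeholder, ",".join(str(i) for i in ids))


# The "Get Configuration - Batch" query from this appendix.
TEMPLATE = (
    "SELECT Name, [Cinchy Id]\n"
    "FROM [HR].[Employees]\n"
    "WHERE [Cinchy Id] IN ({0})\n"
    "AND [Deleted] IS NULL"
)

print(render_batch_query(TEMPLATE, "{0}", range(1, 21)))
```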

Deserialize as JSON

The Deserialize as JSON option is available when returning a selection of columns. Apply this if the column values from the source are JSON and you want to access nested attributes of those values when applying your schema. This can be toggled via the Deserialize as JSON checkbox in the Connections UI.

Example

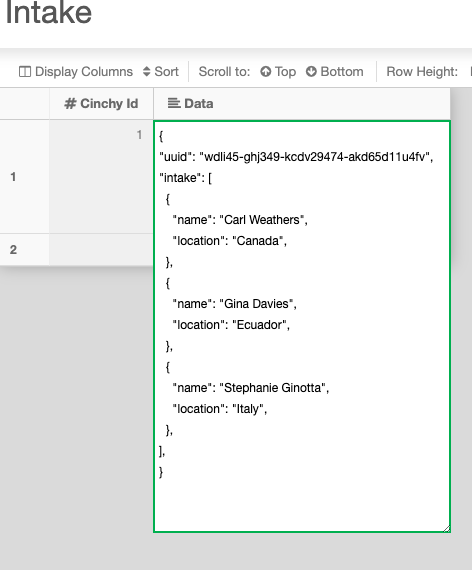

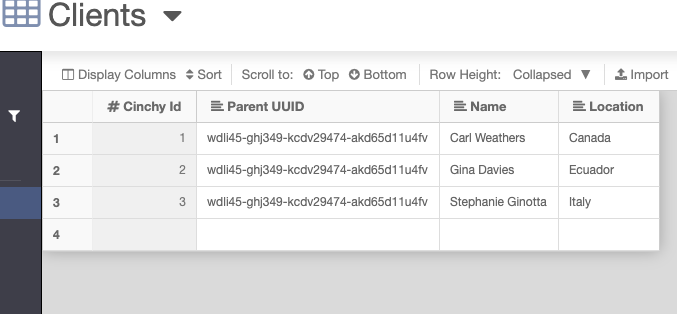

In the following example, the source table (Intake) is being synced into a target table (New Customers) via the Event Broker.

The data from the Intake table is in JSON format, and we want to deserialize it to access nested attributes of name and location:

{

"uuid": "wdli45-ghj349-kcdv29474-akd65d11u4fv",

"intake": [

{

"name": "Carl Weathers",

"location": "Canada"

},

{

"name": "Gina Davies",

"location": "Ecuador"

},

{

"name": "Stephanie Ginotta",

"location": "Italy"

}

]

}

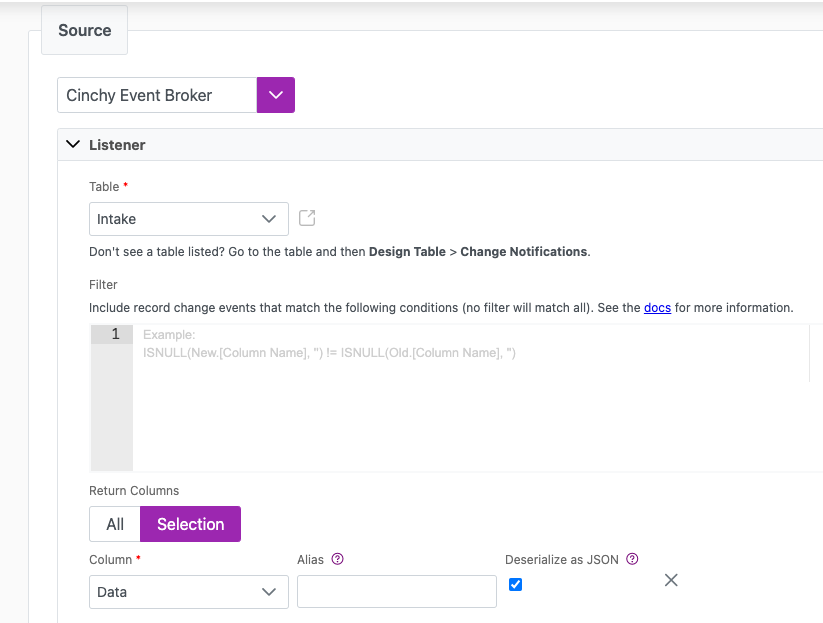

- Create your data sync using the following values:
  - Source: Cinchy Event Broker
    - Listener:
      - Table: Intake
      - Return Columns: Selection
      - Column: Data
      - Deserialize as JSON: True

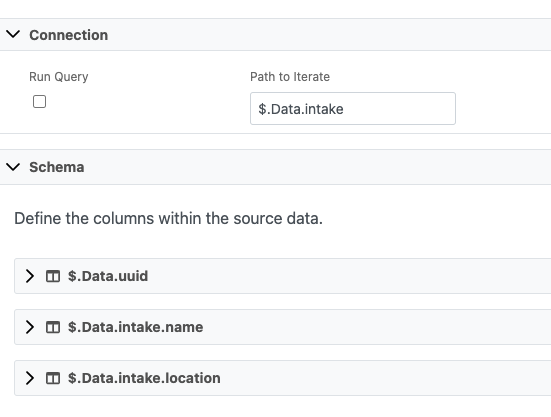

- Connection:
  - Path to Iterate: This value should be $ + source column name + name of array. For example: $.Data.intake
- Schema: Define the parts of the array that you want to pick up. Ensure that you respect the array hierarchy when you define your column names. For example:
  - $.Data.uuid
  - $.Data.intake.name
  - $.Data.intake.location

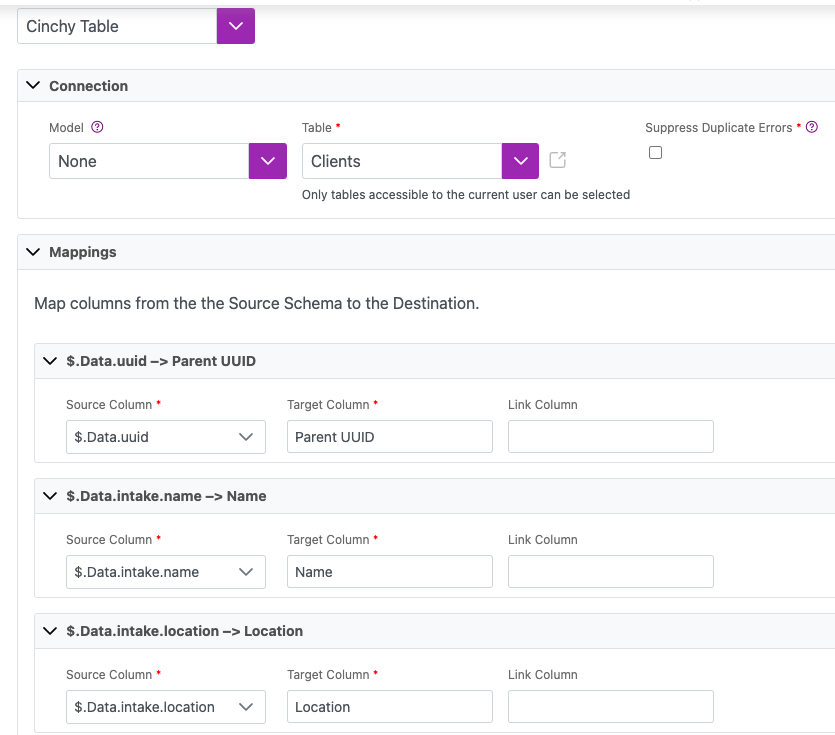

- Destination: Cinchy Table
  - Connection:
    - Table: Clients
  - Mappings: Map your source and destination columns together. For example:
    - $.Data.uuid > Parent UUID
    - $.Data.intake.name > Name
    - $.Data.intake.location > Location

When you run the sync, the noted array values will be inserted into the destination table.
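To make the effect of Deserialize as JSON and Path to Iterate concrete, the sketch below reproduces the example by hand with Python's standard `json` module. `iterate_intake` is a hypothetical helper. The actual sync engine resolves paths such as $.Data.intake itself, so this only mirrors the resulting rows.

```python
import json
from typing import Dict, Iterator


def iterate_intake(event: Dict[str, str]) -> Iterator[Dict[str, str]]:
    """Yield one destination row per item in the intake array (hypothetical)."""
    # Deserialize as JSON: the Data column arrives as a JSON string.
    data = json.loads(event["Data"])
    # Path to Iterate ($.Data.intake): loop over the nested array.
    for item in data["intake"]:
        yield {
            "Parent UUID": data["uuid"],   # $.Data.uuid
            "Name": item["name"],          # $.Data.intake.name
            "Location": item["location"],  # $.Data.intake.location
        }


event = {
    "Data": json.dumps({
        "uuid": "wdli45-ghj349-kcdv29474-akd65d11u4fv",
        "intake": [
            {"name": "Carl Weathers", "location": "Canada"},
            {"name": "Gina Davies", "location": "Ecuador"},
            {"name": "Stephanie Ginotta", "location": "Italy"},
        ],
    })
}

rows = list(iterate_intake(event))
for row in rows:
    print(row)
```

Each item in the array becomes its own row, and the top-level uuid is repeated on every row.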

Appendix B: Filters

Old vs new filter

The Cinchy Event Broker/CDC Stream Source has the unique capability to use "Old" and "New" parameters when filtering data. This filter can be a powerful tool for ensuring that you sync only the specific data that you want.

The "New" and "Old" parameters are based on updates to single records, not columns/rows.
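One way to picture the "Old" and "New" prefixes is as two snapshots of the same record, taken before and after an update. The sketch below is illustrative only: the `Change` shape and the function names are hypothetical, and it models updates to existing records rather than newly created ones. The two predicates mirror the filter examples in this appendix.

```python
from typing import Callable, Dict, List

# Hypothetical shape: {"Old": {...column values...}, "New": {...column values...}}
Change = Dict[str, Dict[str, str]]


def new_is_approved(change: Change) -> bool:
    # Mirrors: New.[Approval State] = 'Approved'
    return change["New"].get("Approval State") == "Approved"


def old_was_in_progress(change: Change) -> bool:
    # Mirrors: Old.[Status] = 'In Progress'
    return change["Old"].get("Status") == "In Progress"


def select(changes: List[Change],
           predicate: Callable[[Change], bool]) -> List[Change]:
    """Keep only the changes that pass the filter."""
    return [change for change in changes if predicate(change)]


changes: List[Change] = [
    {"Old": {"Approval State": "Draft", "Status": "In Progress"},
     "New": {"Approval State": "Approved", "Status": "Done"}},
    {"Old": {"Approval State": "Draft", "Status": "Not Started"},
     "New": {"Approval State": "Draft", "Status": "In Progress"}},
    {"Old": {"Approval State": "Draft", "Status": "In Progress"},
     "New": {"Approval State": "Draft", "Status": "Blocked"}},
]

print(len(select(changes, new_is_approved)))      # changes passing the "New" filter
print(len(select(changes, old_was_in_progress)))  # changes passing the "Old" filter
```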

"New" example:

In the below filter, we only want to sync data where the [Approval State] of a record is newly 'Approved'. For example, if a record was changed from 'Draft' to 'Approved', the filter would sync the record.

Due to internal logic, newly created records will be tagged as both "New" and "Old".

"filter": "New.[Approval State] = 'Approved'"

"Old" example:

In the below filter, we only want to sync data where the [Status] of a record was 'In Progress' but has since been updated to any other [Status]. For example, if a record was changed from 'In Progress' to 'Done', the filter would sync the record.

Due to internal logic, newly created records will be tagged as both "New" and "Old".

"filter": "Old.[Status] = 'In Progress'"