5.3 Release notes

Table of Contents

For instructions on how to upgrade to the latest version of Cinchy, see here.

New Connector

Kafka

We're continuing to improve our Connections offerings, and we now support Kafka as a data sync target in Connections.

Apache Kafka is an end-to-end event streaming platform that:

- Publishes (writes) and subscribes to (reads) streams of events from sources like databases, cloud services, and software applications.

- Stores these events durably and reliably for as long as you want.

- Processes and reacts to the event streams in real-time and retrospectively.

Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time for your key use cases.

For information on setting up data syncs with Kafka as a target, please review the documentation here.

New Inbound Data Format for Connections

Apache AVRO

We've also added support for Apache AVRO (inbound) as a data format and added integration with the Kafka Schema Registry, which helps enforce data governance within a Kafka architecture.

Avro is an open source data serialization system that helps with data exchange between systems, programming languages, and processing frameworks. Avro stores both the data definition and the data together in one message or file. Avro stores the data definition in JSON format making it easy to read and interpret; the data itself is stored in binary format making it compact and efficient.

Some of the benefits for using AVRO as a data format are:

- It's compact;

- It has a direct mapping to/from JSON;

- It's fast;

- It has bindings for a wide variety of programming languages.

For more about AVRO and Kafka, read the documentation here.

For information on configuring AVRO in your platform, review the documentation here.

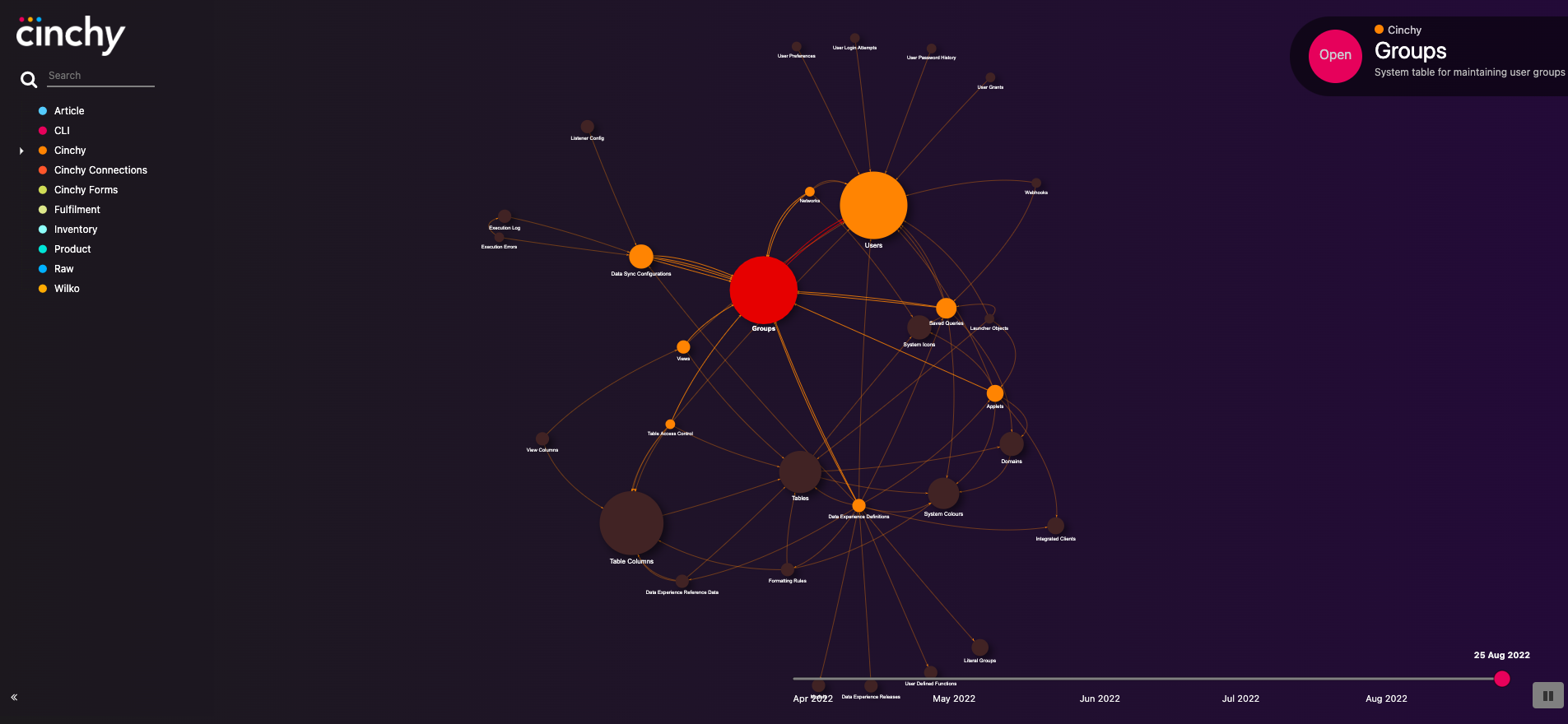

Custom Results in the Network Map

Focus the results of your Network Map to show only the data that you really want to see with our new URL parameters.

You can customize your Data Network Visualizer experience with the following optional parameters: targetNode, maxDepth, and depthLevel.

Example usage

<base url>/apps/datanetworkvisualizer?targetNode=&maxDepth=&depthLevel=

Parameters

-

Target Node: Sets the central node in the network based on the TableID number.

- To find the TableID, look in any table URL.

- Example:

<base url>/apps/datanetworkvisualizer?targetNode=8sets TableID 8 as the central node.

-

Max Depth: Limits the number of network hierarchy levels displayed.

- Example:

<base url>/apps/datanetworkvisualizer?maxDepth=2shows only two levels of connections.

- Example:

-

Depth Level: Highlights a specific network depth.

- Example:

<base url>/apps/datanetworkvisualizer?depthLevel=1highlights first-level connections, muting the rest.

- Example:

The below example visualizer uses the following URL: <base url>/apps/datanetworkvisualizer?targetNode=8\&maxDepth=2\&depthLevel=1

- It shows Table ID 8 ("Groups") as the central node.

- It only displays the Max Depth of 2 connections from the central node.

- It highlights the nodes that have a Depth Level of 1 from the central node.

Enhancements

- We've increased the length of the [Parameters] field in the [Cinchy].[Execution Log] to 100,000 characters.

- Two new parameters are now available to use in real time syncs that have a Cinchy Table as the target. @InsertedRecordIds() and @UpdatedRecordIds() can be used in post sync scripts to insert and update Record IDs respectively, with the desired value input as comma separated list format.

Bug Fixes

- We've fixed a bug that was preventing some new SSO users belonging to existing active directory groups from seeing tables that they should have access to.

- We've fixed a bug where a Webhook would return a 400 error if a JSON body was provided, and the key was in the query parameter of the HTTP request.

GraphQL

- We continue to optimize ourGraphQL beta capabilities by improving memory utilization and performance.